New Deep Learning Method

AUTOMATES ANTARCTIC GLACIER GROUNDING LINE MAPPING

By Alex Keimig

Natalya Ross

University of Houston researchers have validated a method that uses deep learning to automate the mapping of glacier grounding lines in Antarctica. This innovative approach could greatly enhance the accuracy and efficiency of tracking glacier retreat, a critical indicator of sea-level rise.

The study, led by Ph.D. student Natalya Ross from the Department of Civil and Environmental Engineering and co-authored by Pietro Milillo, assistant professor of Civil and Environmental Engineering, was recently published in the high-impact journal Remote Sensing of Environment.

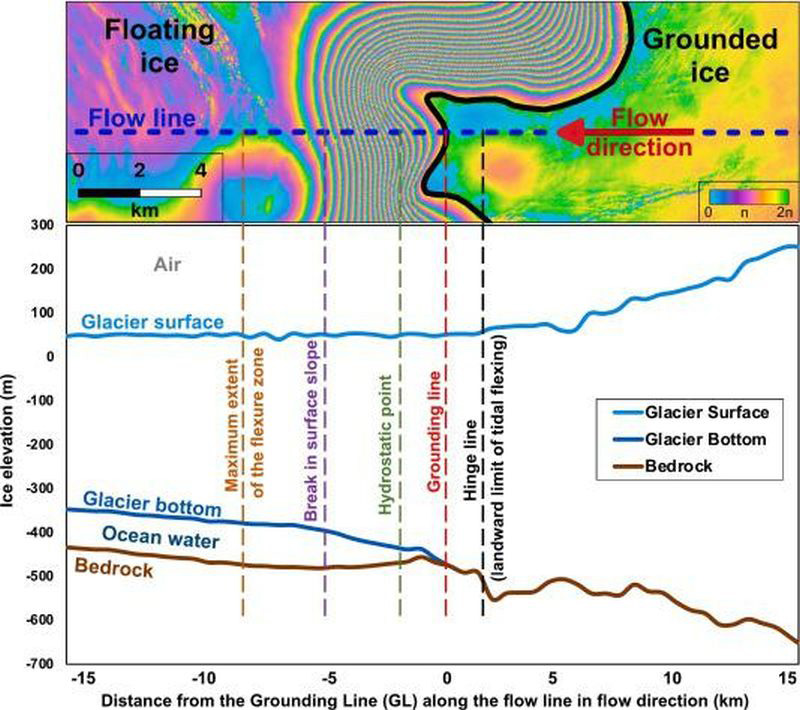

Grounding lines mark the transition point between the grounded and floating sections of glaciers, and their precise identification is crucial for understanding glacier behavior and the potential impacts of ice loss on global sea levels.

Traditionally, this mapping has relied on labor-intensive manual analysis of satellite data. However, as the volume of available data continues to increase, there is an urgent need for automated methods.

“We developed a deep learning model that outperforms existing techniques by automating the detection of grounding lines using X-band radar data,” Ross said. “This method will allow us to analyze vast datasets more quickly and accurately, helping us understand how Antarctic glaciers are evolving.”

The team used advanced satellite imaging to visualize the glacier’s surface and the bedrock beneath it.

The research team tested their method on five key glaciers in Antarctica, including the Moscow University Glacier, which has shown significant retreat in recent decades. The findings revealed that the glacier’s grounding line has been retreating at a rate of 340 meters per year since 1996, while other glaciers in the study showed no signs of retreat.

“This research underscores the importance of monitoring grounding lines, especially as we anticipate accelerated ice loss due to climate change,” Milillo said. “The ability to automate this process means we can keep pace with the growing volume of satellite data, ensuring timely analysis and response.”

The study, developed in collaboration with Luigi Dini at the Italian Space Agency through an international agreement, provided critical insights into the complex behavior of grounding zones, which fluctuate with tides. The findings also emphasized the need for continued technological advancements in satellite data analysis.

This research is supported by the NASA Cryosphere Program and the Italian Space Agency’s COSMO-SkyMed mission, which supplied the high-resolution radar data used in the study. For more information about this research and its implications for glacier monitoring, visit the University of Houston Geosensing Lab.

Press release by staff in the lab of Pietro Milillo, with editing by the Communications Department of the Cullen College of Engineering.

Ph.D. student Voelker leads in transforming

REMOTE SENSING-BASED EARTHQUAKE ASSESSMENT PROCEDURES

Brandon Voelker

A doctoral candidate from the University of Houston’s Department of Civil and Environmental Engineering has co-authored a transformative study that redefines approaches to assessing earthquake damage using cutting-edge remote sensing technologies.

The findings, now featured in the IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, offer valuable perspectives for enhancing disaster response strategies in the wake of the 2023 Turkey-Syria earthquakes.

Brandon Voelker, working closely with his advisor, Pietro Milillo, Ph.D., Assistant Professor of Civil and Environmental Engineering at UH, led the remote sensing team of the Earthquake Engineering Field Investigation Team (EEFIT) mission. The research used Synthetic Aperture Radar (SAR) and high-resolution optical imaging to map structural damage across the earthquake-affected regions of southeastern Turkey, providing a rapid, large-scale view of the destruction.

The February 2023 earthquakes wreaked havoc, claiming tens of thousands of lives and devastating infrastructure across the region. Voelker’s work underscores the crucial role of remote sensing in enabling swift damage assessments, a vital component for directing emergency relief and planning recovery efforts.

By evaluating a range of damage mapping methodologies, the study sheds light on disparities in data accuracy, underscoring the importance of harmonized validation practices.

“Integrating satellite data with direct field observations is a game-changer for disaster response,” Voelker said. “It enables teams on the ground to zero in on the most affected areas, ensuring their efforts are both efficient and effective.”

In collaboration with an international consortium of researchers, the study drew on satellite data from the European Space Agency, data products processed by NASA’s Jet Propulsion Laboratory and the German Aerospace Center, and other sources. The team employed a hybrid approach, blending remote sensing insights with ground-based data to produce detailed, actionable damage maps. These maps proved invaluable for directing field surveyors to a variety of sites, allowing the team to capture a nuanced picture of the region’s resilience and structural challenges.

Milillo, who co-authored the study, highlighted the research’s broader impact.

“Brandon’s contributions have established a new standard in the field of disaster damage assessment through remote sensing,” Milillo said. “This work paves the way for faster, more reliable disaster response efforts, potentially making a life-saving difference in future emergencies.”

This achievement represents a notable advancement for the University of Houston’s Geosensing Lab and its Civil and Environmental Engineering Department, further cementing their leadership in the field of remote sensing and disaster resilience research.

The study’s international team included partners from prestigious institutions such as Delft University of Technology, University College London, Bundeswehr University Munich, the German Aerospace Center (DLR), and the University of Cambridge, UK. This diverse expertise enriched the research, enabling a comprehensive analysis of earthquake damage through a multidisciplinary lens.

(a) A building in Antakya, Turkey, was rated as having “very heavy damage” during a survey in March 2024, meaning it was seriously affected.

(b) A satellite image of the same building, with its outline marked in red. Despite the building’s severe condition, currently adopted AI algorithms assessed it as having no visible damage.

Press release by staff in the lab of Pietro Milillo, with editing by the Communications Department of the Cullen College of Engineering.

Akash Awasthi, ECE Ph.D. Student,

CONDUCTING CRITICAL RESEARCH IN AI & IMAGE ANALYSIS

By Alex Keimig

Akash Awasthi at the Ames Research Center

Electrical and Computer Engineering senior Ph.D. candidate Akash Awasthi is making a name for himself. With a paper nominated for the Best Poster Award at the 2024 IEEE International Symposium on Biomedical Imaging, a second paper selected for oral presentation at the IEEE 26th International Workshop on Multimedia Signal Processing, and a third paper, co-authored with Houston Methodist, published in Nature Scientific Reports, his recent research in artificial intelligence, computer vision and biomedical image analysis has been receiving significant attention from the academic community.

“Decoding radiologists’ intentions: a novel system for accurate region identification in chest X-ray image analysis”, 2024 IEEE International Symposium on Biomedical Imaging, Best Poster Award nomination

“This nomination was a very prestigious honor, and my work was mostly about using AI models to assist radiologists in disease diagnosis. We don’t want to develop an AI model as a standalone system, or one which can replace the radiologists or doctors. We want to develop a system that can be a collaborative resource with the radiologist, because medical diagnosis is a critical task — there is a lot of trust involved there, and we cannot just completely hand that over to a machine. We want the doctor to be able to learn from the AI, and for the AI to be able to learn from the doctor in order to help with diagnosis and enhance training programs in medical science.”

“Anomaly Detection in Satellite Videos using Diffusion Models”, IEEE 26th International Workshop on Multimedia Signal Processing — Oral Presentation

“This work was in collaboration with NASA Ames. We were trying to use diffusion models — basically generative AI — in detecting anomalous events in the livestreams of NASA satellite video feeds. NASA collects satellite data from all over the US, and we wanted to use that high-resolution satellite video and some kind of generative AI technique that can detect anomalous events like a fire in the forest, or even fog or a tornado — any kind of anomaly event in the live stream of video. We made the focus a theoretical problem of anomaly detection rather than doing any specialized focus on a specific type of event, and that theoretical framework can ultimately — hopefully — be put anywhere with any problem and solve it in a particular way.”

“Deep learning-derived optimal aviation strategies to control pandemics”, Nature Scientific Reports

“We wanted to develop a specific framework using the edge graph neural network and see how we could use that to understand the non-linear dynamics of pandemics. This work specifically concerned COVID-19, but we developed a very generalized framework which can be applied to any potential future pandemics, or even past pandemics, to understand the factors which really drive pandemic spread. Because my work is more into developing very specialized algorithms for scientific applications, this was really interesting work.”

“My research is mostly about large multimodal models, and how we can use current large language models and large multimodal models for certain scientific applications — specifically medical data and radiology,” Awasthi said. “For example, we’re working on using all of the current AI tools available to develop collaborative systems, for both diagnostic and training purposes, for radiologists.”

In addition to medical imaging, Awasthi also works with the Department of Energy’s Argonne National Laboratory using generative AI to solve problems related to weather and wind data, and has been invited by NASA Ames to present his work on novel approaches to urban mapping and the precise classification of urban elements like trees and buildings with combined LIDAR and aerial imagery.

“AI has a lot of power,” he said, “and I would say it’s as intelligent as it is unintelligent. We have to develop an understanding of how we can really make sense out of these models for useful tasks, because there’s a lot of capability there, but we have to instruct it in the proper direction to really make use of it.”

While the recent projects mentioned above appear to span a broad spectrum of topics (enhanced biomedical radiological image analysis, the automatic detection of anomalous events in satellite video feeds, and a framework for understanding the dynamics of pandemic spread and growth), they all boil down to one essential focus: algorithms.

“My particular area of research is more into algorithmic sciences and even basic algorithms: how can we specialize our foundational models for different tasks? Every application and dataset has a different group of challenges and complexities to address,” Awasthi added. “If we can harness the power of these big models and personalize them for our specific use cases, we will be able to automate many, many tasks and make them more efficient. These models won’t be making big scientific discoveries for us, but they can absolutely be used to automate certain tasks and find patterns in data. AI can run the calculations that allow humans to use reasoning and innovation to move us forward.”