Prosthetic legs combine mind and muscle to travel advanced tech terrain

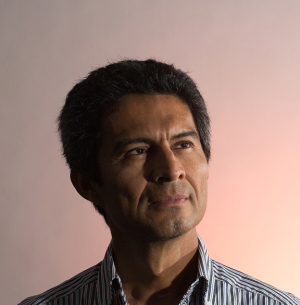

The National Science Foundation has awarded Jose Luis "Pepe" Contreras-Vidal, electrical and computer engineering professor at UH Cullen College, two grants to develop state-of-the-art upper- and lower-limb prosthetic technology.

In 2011, he received almost $400,000 to develop a neural interface that controls grasping functions in prosthetic hands exclusively with brain signals generated by amputees. Most recently, he and his collaborator earned $1 million to build next-generation myoelectric prosthetic legs. Their interfaces will harness amputees' brain signals to augment the movement control that muscle signals already provide, to varying degrees, in existing versions.

Normal limb movement is controlled by the brain, which sends electrical signals down the spinal cord and through the peripheral nerves to muscles in the limbs. The most advanced prosthetic devices on the market manipulate these electrical signals for movement with myoelectric technology. When amputees generate thoughts about moving their phantom limbs, electrical signals travel to remaining muscle in their residual limbs, where electrodes communicate the signals to amplifiers in their artificial limbs. Computer programs interpret the amplified signals to instruct battery-powered motorized movement of their prostheses.
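The signal chain described above (electrode, amplifier, interpreting program, motor) can be illustrated with a minimal sketch. It is not the software of any commercial prosthesis: the function names, the window size, the threshold and the sample values are all assumptions made up for illustration. It rectifies a muscle signal, smooths it into an envelope and issues a "move" command whenever the envelope exceeds a threshold.

```python
def emg_envelope(samples, window=5):
    """Rectify the signal, then smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        envelope.append(sum(chunk) / len(chunk))
    return envelope


def motor_commands(envelope, threshold=0.5):
    """Map each envelope value to a hypothetical motor command."""
    return ["move" if level > threshold else "rest" for level in envelope]


# Synthetic muscle signal (made-up values): quiet, a burst of activity, quiet.
signal = [0.05, -0.04, 0.06, -0.9, 1.1, -1.0, 0.95, -0.05, 0.04, -0.03]
envelope = emg_envelope(signal, window=3)
commands = motor_commands(envelope, threshold=0.5)
print(commands)
```

Smoothing makes the command lag slightly behind the start and end of the burst, one reason a later muscle signal might benefit from an earlier cue.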

Prostheses can restore degrees of normal limb function, boost confidence and ease symptoms of depression in amputees. Yet the cost of the most advanced artificial limbs, and the limited residual-limb muscle available to help operate them, are concerns for some. Myoelectric prostheses are not necessarily effective options for amputees whose amputation sites are very near their trunks. Insufficient amounts of remaining muscle emit unclear electrical signals, and the limited surface area of the remaining limb makes electrode placement difficult. Furthermore, those who are fitted with myoelectric prosthetic limbs can experience varying degrees of performance, depending on these individual characteristics of their amputation sites.

Consequently, some lower-limb amputees find navigating uneven terrain and crowds of people with their artificial legs mentally and physically exhausting. Pepe’s aim is to change that by tapping directly into the brains of amputees. With brain-machine interfaces, he is exploring ways for them to expend less energy by leveraging their brain signals to optimize navigation with myoelectric prosthetic limbs.

“We’re trying to add another layer of control coming directly from the brain,” Pepe said. “We want to harness the early intent signals from the brain and combine them with intention detected at the muscle, which comes later, to predict what the user wants to do with the leg, taking into account the environment.”

The project merges Pepe’s expertise in neural interfaces with the myoelectric expertise of Helen Huang, associate professor in the joint biomedical engineering department of North Carolina State University and the University of North Carolina. Huang met Pepe at a workshop several years ago and began following his groundbreaking work controlling movement of exoskeletons and upper-limb prosthetics entirely with brain signals. She approached him about this collaborative project because she considers him a leader in brain-machine interface research.

“It’s nice to collaborate with another group because we have complementary expertise, and the students get cross-trained in these areas,” Pepe said.

The team’s mind-controlled artificial limb project is literally in motion at the UH National Center for Human Performance in the Texas Medical Center. A lower-limb amputee fitted with a myoelectric leg recently donned a skullcap embedded with electrodes, with more electrodes attached to his residual limb, to navigate a track with ramps and stairs. As he walked and climbed the course, Pepe’s research assistants, Justin Brantley and Fangshi Zhu, and NCSU collaborators followed, pushing a rolling cart that carried a computer tethered by wires to the electrodes recording the subject’s brain and muscle signals.

“Muscle is limited with amputees, so there are limited amounts of muscle from which you can record signals, compared to brains with full function – though brain signals are noisier because they control other human functions,” Huang said. “So it’s interesting scientifically to think about … how both signals from muscle and brain can work together to make the decoding more accurate.”

By the end of the four-year project, the research team intends to gain a better understanding of the mechanisms brains use to plan, program and execute movement in both natural and prosthetic legs. They hope to find that amputees can train their brains to assimilate their artificial limbs into their bodies by practicing with versions that operate and feel as much like normal legs as possible. Finally, they intend to validate a prototype of the artificial leg in a small group of lower-limb amputees and then proceed to the larger clinical trials necessary for their product to reach the prosthetics market.

“It’s always our intention not to leave the technology in the lab, but to move it forward to end users, and that takes a lot of time and financial resources,” Pepe said. “After we reach the first milestone, we need to assess the benefits in a larger population of amputees so they can be prescribed by doctors.”

Artificial hands grasp mind control for the first time

Human hands hold forks, toothbrushes, pens, playing cards and other hands; feel textures, shapes, pain and pressure; throw Frisbees, baseballs and footballs; and swing hammers and shovels. Yet they easily go unnoticed and underappreciated in busy everyday lives.

Without hands, countless professionals, including musicians, painters, carpenters and auto mechanics, would lose the ability to pursue their passions and earn their livelihoods. For upper-limb amputees, life changes drastically when they cannot perform routine functions such as grasping, pressing, feeling and gesturing.

“We know the human hand is a powerful and beautiful part of the body that can do many things – play the piano, write, paint, feel the environment – an amazing piece of engineering and design,” Pepe said. “We would like to provide that capability back to upper limb amputees who have lost their hands due to injury.”

Controlling prosthetic hands entirely with the minds of amputees, through a noninvasive technique such as electroencephalography (EEG), was thought impossible until Pepe became interested in the idea. Earlier this year, upper-limb amputees wearing electrode-embedded skullcaps used nothing but their thoughts to grasp a range of objects with their multi-fingered prosthetic hands in Pepe’s lab.

“The long-term goal for all of this work is to have noninvasive – no extra surgeries, no extra implants – ways to control a dexterous robotic device,” said Robert Armiger, project manager for amputee research at Johns Hopkins University, which uses targeted muscle reinnervation (TMR) with its modular prosthetic arms, in a New York Times article in May.

In the same article, author Emma Cott wrote, “In the future, researchers envision a kind of cap with sensors that an amputee or paralyzed person could wear that would feed information about brain activity to the robotic arm.”

Voila. The future began at the University of Houston earlier this year, and the results were published in the journal Frontiers in Neuroscience in March.

“We have been able to demonstrate the feasibility of recording and extracting motor intent from brain activity from the outside using EEG, and we have used such signals to control powered prosthetic hands,” Pepe said.

Electrodes in the skullcap collect patterns of signals generated by neurons in the brain when amputees think about normal hand functions such as grasping, tapping or pointing. Each of the patterns correlates with a specific movement and also provides information about intent. An algorithm developed by Pepe interprets the intent and sends the information to a prosthetic hand that performs the intended movement for the amputee.
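The idea that each signal pattern corresponds to a specific movement can be sketched as a simple classification problem. The example below is only an illustration and is not the algorithm developed in Pepe’s lab, which the article does not describe. It swaps in a nearest-centroid classifier, and the two-number feature vectors, the labels and the function names are all hypothetical.

```python
import math


def centroid(vectors):
    """Average a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def train(examples):
    """Build one average pattern (centroid) per intended movement."""
    return {label: centroid(vectors) for label, vectors in examples.items()}


def decode_intent(model, features):
    """Return the movement whose centroid is closest to the new pattern."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Made-up training patterns: two hypothetical EEG features per recording.
training = {
    "grasp": [[1.0, 0.1], [0.9, 0.2]],
    "tap": [[0.1, 1.0], [0.2, 0.9]],
    "point": [[0.9, 0.9], [1.0, 1.0]],
}
model = train(training)

# A new pattern is decoded and would then be sent to the prosthetic hand.
intent = decode_intent(model, [0.95, 0.15])
print(intent)
```

Real EEG decoding involves many more channels, filtering and far noisier data. The sketch only shows the mapping from a recorded pattern to an intended movement.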

Next year, Pepe expects to unveil a full system for mind-controlled artificial hands that includes a sense of touch for amputees. Research assistant Andrew Paek is developing methods to detect and send sensory feedback, such as the amount of pressure applied to grasped objects, through sensors in four of the five fingertips of the amputee’s prosthetic hand.

“Until now, our amputee volunteers have used only visual feedback to grasp, so they see what they’re doing with the prosthetic hand,” Pepe said. “But they also need to feel what the fingers are doing to the object because that’s very important to gain full control of the hand, so we are adding the sense of touch to the neural interface.”